diff --git a/README.md b/README.md

index b5e6a31..54c7a01 100644

--- a/README.md

+++ b/README.md

@@ -1,7 +1,7 @@

# privateGPT

Ask questions to your documents without an internet connection, using the power of LLMs. 100% private, no data leaves your execution environment at any point. You can ingest documents and ask questions without an internet connection!

-Built with [LangChain](https://github.com/hwchase17/langchain) and [GPT4All](https://github.com/nomic-ai/gpt4all)

+Built with [LangChain](https://github.com/hwchase17/langchain) and [GPT4All](https://github.com/nomic-ai/gpt4all) and [LlamaCpp](https://github.com/ggerganov/llama.cpp)

@@ -13,26 +13,35 @@ In order to set your environment up to run the code here, first install all requ

pip install -r requirements.txt

```

-Then, download the 2 models and place them in a folder called `./models`:

-- LLM: default to [ggml-gpt4all-j-v1.3-groovy.bin](https://gpt4all.io/models/ggml-gpt4all-j-v1.3-groovy.bin). If you prefer a different GPT4All-J compatible model, just download it and reference it in `privateGPT.py`.

-- Embedding: default to [ggml-model-q4_0.bin](https://huggingface.co/Pi3141/alpaca-native-7B-ggml/resolve/397e872bf4c83f4c642317a5bf65ce84a105786e/ggml-model-q4_0.bin). If you prefer a different compatible Embeddings model, just download it and reference it in `privateGPT.py` and `ingest.py`.

+Rename example.env to .env and edit the variables appropriately.

+```

+MODEL_TYPE: supports LlamaCpp or GPT4All

+PERSIST_DIRECTORY: is the folder you want your vectorstore in

+LLAMA_EMBEDDINGS_MODEL: Path to your LlamaCpp supported embeddings model

+MODEL_PATH: Path to your GPT4All or LlamaCpp supported LLM

+MODEL_N_CTX: Maximum token limit for both embeddings and LLM models

+```

+

+Then, download the 2 models and place them in a directory of your choice (Ensure to update your .env with the model paths):

+- LLM: default to [ggml-gpt4all-j-v1.3-groovy.bin](https://gpt4all.io/models/ggml-gpt4all-j-v1.3-groovy.bin). If you prefer a different GPT4All-J compatible model, just download it and reference it in your `.env` file.

+- Embedding: default to [ggml-model-q4_0.bin](https://huggingface.co/Pi3141/alpaca-native-7B-ggml/resolve/397e872bf4c83f4c642317a5bf65ce84a105786e/ggml-model-q4_0.bin). If you prefer a different compatible Embeddings model, just download it and reference it in your `.env` file.

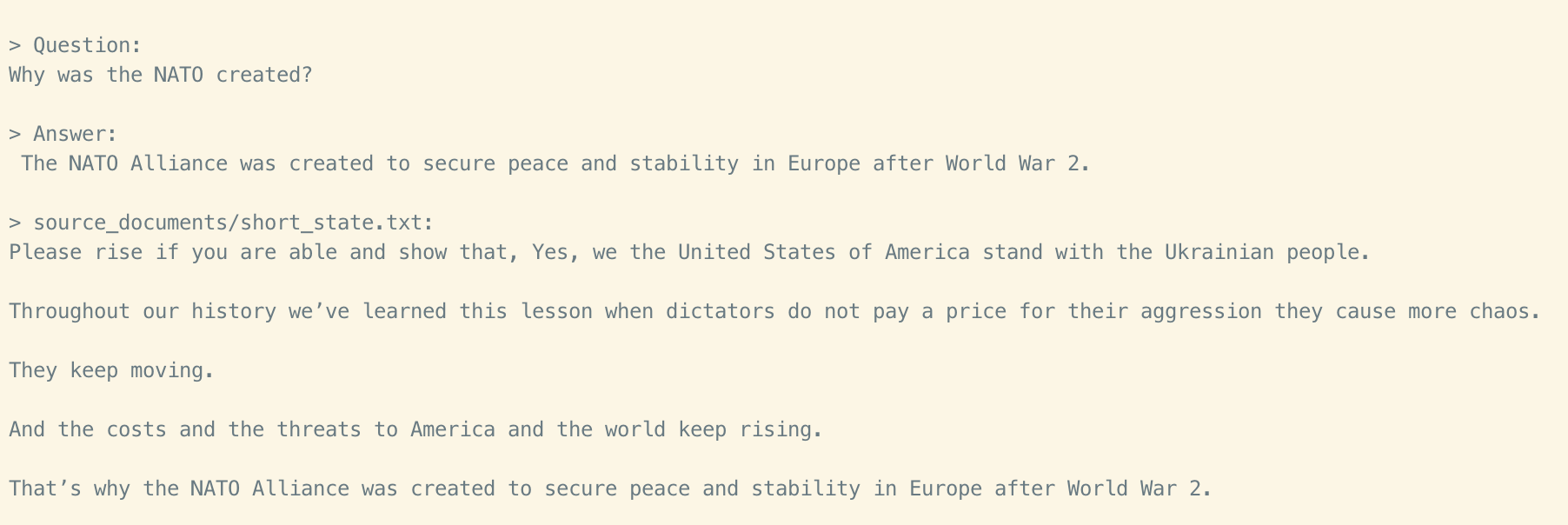

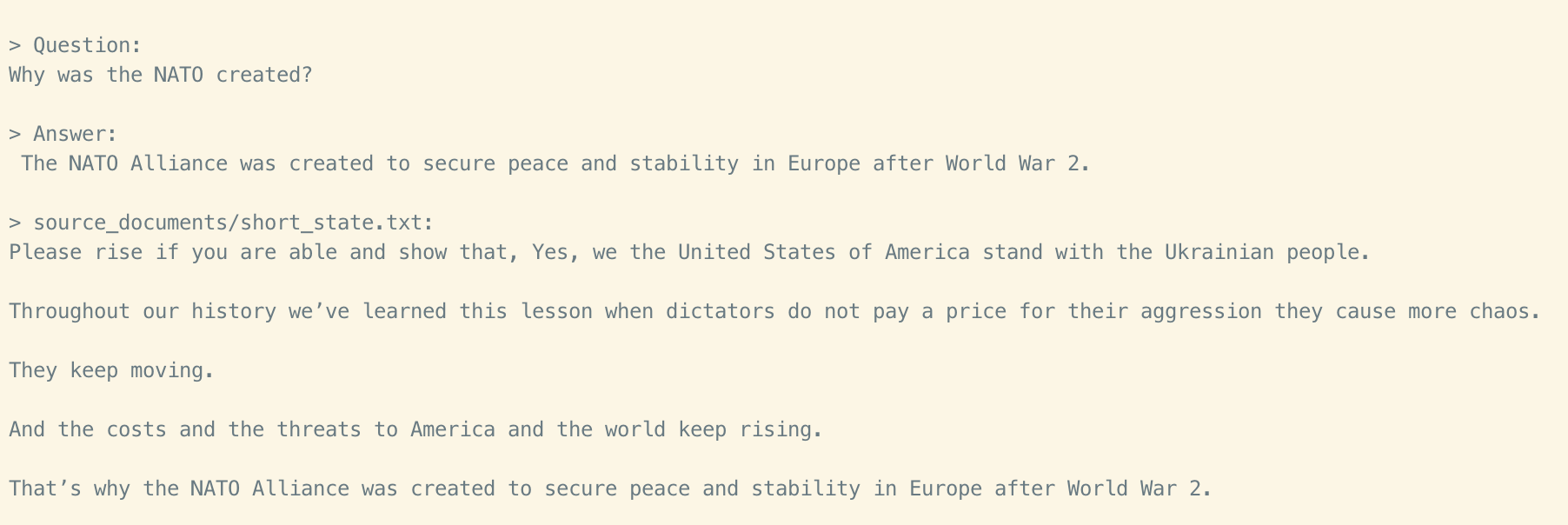

## Test dataset

This repo uses a [state of the union transcript](https://github.com/imartinez/privateGPT/blob/main/source_documents/state_of_the_union.txt) as an example.

## Instructions for ingesting your own dataset

-Get your .txt file ready.

+Put any and all of your .txt, .pdf, or .csv files into the source_documents directory

-Run the following command to ingest the data.

+Run the following command to ingest all the data.

```shell

-python ingest.py

+python ingest.py

```

-It will create a `db` folder containing the local vectorstore. Will take time, depending on the size of your document.

-You can ingest as many documents as you want by running `ingest`, and all will be accumulated in the local embeddings database.

-If you want to start from scratch, delete the `db` folder.

+It will create a `db` folder containing the local vectorstore. Will take time, depending on the size of your documents.

+You can ingest as many documents as you want, and all will be accumulated in the local embeddings database.

+If you want to start from an empty database, delete the `db` folder.

Note: during the ingest process no data leaves your local environment. You could ingest without an internet connection.

@@ -59,7 +68,7 @@ Type `exit` to finish the script.

Selecting the right local models and the power of `LangChain` you can run the entire pipeline locally, without any data leaving your environment, and with reasonable performance.

- `ingest.py` uses `LangChain` tools to parse the document and create embeddings locally using `LlamaCppEmbeddings`. It then stores the result in a local vector database using `Chroma` vector store.

-- `privateGPT.py` uses a local LLM based on `GPT4All-J` to understand questions and create answers. The context for the answers is extracted from the local vector store using a similarity search to locate the right piece of context from the docs.

+- `privateGPT.py` uses a local LLM based on `GPT4All-J` or `LlamaCpp` to understand questions and create answers. The context for the answers is extracted from the local vector store using a similarity search to locate the right piece of context from the docs.

- `GPT4All-J` wrapper was introduced in LangChain 0.0.162.

# Disclaimer

diff --git a/example.env b/example.env

new file mode 100644

index 0000000..149eca2

--- /dev/null

+++ b/example.env

@@ -0,0 +1,5 @@

+PERSIST_DIRECTORY=db

+LLAMA_EMBEDDINGS_MODEL=models/ggml-model-q4_0.bin

+MODEL_TYPE=GPT4All

+MODEL_PATH=models/ggml-gpt4all-j-v1.3-groovy.bin

+MODEL_N_CTX=1000

\ No newline at end of file

diff --git a/ingest.py b/ingest.py

index ac1921e..2a9a161 100644

--- a/ingest.py

+++ b/ingest.py

@@ -1,19 +1,29 @@

-from langchain.document_loaders import TextLoader

+import os

+from langchain.document_loaders import TextLoader, PDFMinerLoader, CSVLoader

from langchain.text_splitter import RecursiveCharacterTextSplitter

from langchain.vectorstores import Chroma

from langchain.embeddings import LlamaCppEmbeddings

-from sys import argv

from constants import PERSIST_DIRECTORY

from constants import CHROMA_SETTINGS

def main():

+ llama_embeddings_model = os.environ.get('LLAMA_EMBEDDINGS_MODEL')

+ persist_directory = os.environ.get('PERSIST_DIRECTORY')

+ model_n_ctx = os.environ.get('MODEL_N_CTX')

# Load document and split in chunks

- loader = TextLoader(argv[1], encoding="utf8")

+ for root, dirs, files in os.walk("source_documents"):

+ for file in files:

+ if file.endswith(".txt"):

+ loader = TextLoader(os.path.join(root, file), encoding="utf8")

+ elif file.endswith(".pdf"):

+ loader = PDFMinerLoader(os.path.join(root, file))

+ elif file.endswith(".csv"):

+ loader = CSVLoader(os.path.join(root, file))

documents = loader.load()

text_splitter = RecursiveCharacterTextSplitter(chunk_size=500, chunk_overlap=50)

texts = text_splitter.split_documents(documents)

# Create embeddings

- llama = LlamaCppEmbeddings(model_path="./models/ggml-model-q4_0.bin")

+ llama = LlamaCppEmbeddings(model_path=llama_embeddings_model, n_ctx=model_n_ctx)

# Create and store locally vectorstore

db = Chroma.from_documents(texts, llama, persist_directory=PERSIST_DIRECTORY, client_settings=CHROMA_SETTINGS)

db.persist()

diff --git a/privateGPT.py b/privateGPT.py

index a95613b..38fab48 100644

--- a/privateGPT.py

+++ b/privateGPT.py

@@ -2,18 +2,32 @@ from langchain.chains import RetrievalQA

from langchain.embeddings import LlamaCppEmbeddings

from langchain.callbacks.streaming_stdout import StreamingStdOutCallbackHandler

from langchain.vectorstores import Chroma

-from langchain.llms import GPT4All

-from constants import PERSIST_DIRECTORY

+from langchain.llms import GPT4All, LlamaCpp

+import os

+

+llama_embeddings_model = os.environ.get("LLAMA_EMBEDDINGS_MODEL")

+persist_directory = os.environ.get('PERSIST_DIRECTORY')

+

+model_type = os.environ.get('MODEL_TYPE')

+model_path = os.environ.get('MODEL_PATH')

+model_n_ctx = os.environ.get('MODEL_N_CTX')

+

from constants import CHROMA_SETTINGS

-def main():

- # Load stored vectorstore

- llama = LlamaCppEmbeddings(model_path="./models/ggml-model-q4_0.bin")

- db = Chroma(persist_directory=PERSIST_DIRECTORY, embedding_function=llama, client_settings=CHROMA_SETTINGS)

+def main():

+ llama = LlamaCppEmbeddings(model_path=llama_embeddings_model, n_ctx=model_n_ctx)

+ db = Chroma(persist_directory=persist_directory, embedding_function=llama, client_settings=CHROMA_SETTINGS)

retriever = db.as_retriever()

# Prepare the LLM

callbacks = [StreamingStdOutCallbackHandler()]

- llm = GPT4All(model='./models/ggml-gpt4all-j-v1.3-groovy.bin', backend='gptj', callbacks=callbacks, verbose=False)

+ match model_type:

+ case "LlamaCpp":

+ llm = LlamaCpp(model_path=model_path, n_ctx=model_n_ctx, callbacks=callbacks, verbose=False)

+ case "GPT4All":

+ llm = GPT4All(model=model_path, n_ctx=model_n_ctx, backend='gptj', callbacks=callbacks, verbose=False)

+ case _default:

+ print(f"Model {model_type} not supported!")

+ exit;

qa = RetrievalQA.from_chain_type(llm=llm, chain_type="stuff", retriever=retriever, return_source_documents=True)

# Interactive questions and answers

while True:

@@ -13,26 +13,35 @@ In order to set your environment up to run the code here, first install all requ

pip install -r requirements.txt

```

-Then, download the 2 models and place them in a folder called `./models`:

-- LLM: default to [ggml-gpt4all-j-v1.3-groovy.bin](https://gpt4all.io/models/ggml-gpt4all-j-v1.3-groovy.bin). If you prefer a different GPT4All-J compatible model, just download it and reference it in `privateGPT.py`.

-- Embedding: default to [ggml-model-q4_0.bin](https://huggingface.co/Pi3141/alpaca-native-7B-ggml/resolve/397e872bf4c83f4c642317a5bf65ce84a105786e/ggml-model-q4_0.bin). If you prefer a different compatible Embeddings model, just download it and reference it in `privateGPT.py` and `ingest.py`.

+Rename example.env to .env and edit the variables appropriately.

+```

+MODEL_TYPE: supports LlamaCpp or GPT4All

+PERSIST_DIRECTORY: is the folder you want your vectorstore in

+LLAMA_EMBEDDINGS_MODEL: Path to your LlamaCpp supported embeddings model

+MODEL_PATH: Path to your GPT4All or LlamaCpp supported LLM

+MODEL_N_CTX: Maximum token limit for both embeddings and LLM models

+```

+

+Then, download the 2 models and place them in a directory of your choice (Ensure to update your .env with the model paths):

+- LLM: default to [ggml-gpt4all-j-v1.3-groovy.bin](https://gpt4all.io/models/ggml-gpt4all-j-v1.3-groovy.bin). If you prefer a different GPT4All-J compatible model, just download it and reference it in your `.env` file.

+- Embedding: default to [ggml-model-q4_0.bin](https://huggingface.co/Pi3141/alpaca-native-7B-ggml/resolve/397e872bf4c83f4c642317a5bf65ce84a105786e/ggml-model-q4_0.bin). If you prefer a different compatible Embeddings model, just download it and reference it in your `.env` file.

## Test dataset

This repo uses a [state of the union transcript](https://github.com/imartinez/privateGPT/blob/main/source_documents/state_of_the_union.txt) as an example.

## Instructions for ingesting your own dataset

-Get your .txt file ready.

+Put any and all of your .txt, .pdf, or .csv files into the source_documents directory

-Run the following command to ingest the data.

+Run the following command to ingest all the data.

```shell

-python ingest.py

+python ingest.py

```

-It will create a `db` folder containing the local vectorstore. Will take time, depending on the size of your document.

-You can ingest as many documents as you want by running `ingest`, and all will be accumulated in the local embeddings database.

-If you want to start from scratch, delete the `db` folder.

+It will create a `db` folder containing the local vectorstore. Will take time, depending on the size of your documents.

+You can ingest as many documents as you want, and all will be accumulated in the local embeddings database.

+If you want to start from an empty database, delete the `db` folder.

Note: during the ingest process no data leaves your local environment. You could ingest without an internet connection.

@@ -59,7 +68,7 @@ Type `exit` to finish the script.

Selecting the right local models and the power of `LangChain` you can run the entire pipeline locally, without any data leaving your environment, and with reasonable performance.

- `ingest.py` uses `LangChain` tools to parse the document and create embeddings locally using `LlamaCppEmbeddings`. It then stores the result in a local vector database using `Chroma` vector store.

-- `privateGPT.py` uses a local LLM based on `GPT4All-J` to understand questions and create answers. The context for the answers is extracted from the local vector store using a similarity search to locate the right piece of context from the docs.

+- `privateGPT.py` uses a local LLM based on `GPT4All-J` or `LlamaCpp` to understand questions and create answers. The context for the answers is extracted from the local vector store using a similarity search to locate the right piece of context from the docs.

- `GPT4All-J` wrapper was introduced in LangChain 0.0.162.

# Disclaimer

diff --git a/example.env b/example.env

new file mode 100644

index 0000000..149eca2

--- /dev/null

+++ b/example.env

@@ -0,0 +1,5 @@

+PERSIST_DIRECTORY=db

+LLAMA_EMBEDDINGS_MODEL=models/ggml-model-q4_0.bin

+MODEL_TYPE=GPT4All

+MODEL_PATH=models/ggml-gpt4all-j-v1.3-groovy.bin

+MODEL_N_CTX=1000

\ No newline at end of file

diff --git a/ingest.py b/ingest.py

index ac1921e..2a9a161 100644

--- a/ingest.py

+++ b/ingest.py

@@ -1,19 +1,29 @@

-from langchain.document_loaders import TextLoader

+import os

+from langchain.document_loaders import TextLoader, PDFMinerLoader, CSVLoader

from langchain.text_splitter import RecursiveCharacterTextSplitter

from langchain.vectorstores import Chroma

from langchain.embeddings import LlamaCppEmbeddings

-from sys import argv

from constants import PERSIST_DIRECTORY

from constants import CHROMA_SETTINGS

def main():

+ llama_embeddings_model = os.environ.get('LLAMA_EMBEDDINGS_MODEL')

+ persist_directory = os.environ.get('PERSIST_DIRECTORY')

+ model_n_ctx = os.environ.get('MODEL_N_CTX')

# Load document and split in chunks

- loader = TextLoader(argv[1], encoding="utf8")

+ for root, dirs, files in os.walk("source_documents"):

+ for file in files:

+ if file.endswith(".txt"):

+ loader = TextLoader(os.path.join(root, file), encoding="utf8")

+ elif file.endswith(".pdf"):

+ loader = PDFMinerLoader(os.path.join(root, file))

+ elif file.endswith(".csv"):

+ loader = CSVLoader(os.path.join(root, file))

documents = loader.load()

text_splitter = RecursiveCharacterTextSplitter(chunk_size=500, chunk_overlap=50)

texts = text_splitter.split_documents(documents)

# Create embeddings

- llama = LlamaCppEmbeddings(model_path="./models/ggml-model-q4_0.bin")

+ llama = LlamaCppEmbeddings(model_path=llama_embeddings_model, n_ctx=model_n_ctx)

# Create and store locally vectorstore

db = Chroma.from_documents(texts, llama, persist_directory=PERSIST_DIRECTORY, client_settings=CHROMA_SETTINGS)

db.persist()

diff --git a/privateGPT.py b/privateGPT.py

index a95613b..38fab48 100644

--- a/privateGPT.py

+++ b/privateGPT.py

@@ -2,18 +2,32 @@ from langchain.chains import RetrievalQA

from langchain.embeddings import LlamaCppEmbeddings

from langchain.callbacks.streaming_stdout import StreamingStdOutCallbackHandler

from langchain.vectorstores import Chroma

-from langchain.llms import GPT4All

-from constants import PERSIST_DIRECTORY

+from langchain.llms import GPT4All, LlamaCpp

+import os

+

+llama_embeddings_model = os.environ.get("LLAMA_EMBEDDINGS_MODEL")

+persist_directory = os.environ.get('PERSIST_DIRECTORY')

+

+model_type = os.environ.get('MODEL_TYPE')

+model_path = os.environ.get('MODEL_PATH')

+model_n_ctx = os.environ.get('MODEL_N_CTX')

+

from constants import CHROMA_SETTINGS

-def main():

- # Load stored vectorstore

- llama = LlamaCppEmbeddings(model_path="./models/ggml-model-q4_0.bin")

- db = Chroma(persist_directory=PERSIST_DIRECTORY, embedding_function=llama, client_settings=CHROMA_SETTINGS)

+def main():

+ llama = LlamaCppEmbeddings(model_path=llama_embeddings_model, n_ctx=model_n_ctx)

+ db = Chroma(persist_directory=persist_directory, embedding_function=llama, client_settings=CHROMA_SETTINGS)

retriever = db.as_retriever()

# Prepare the LLM

callbacks = [StreamingStdOutCallbackHandler()]

- llm = GPT4All(model='./models/ggml-gpt4all-j-v1.3-groovy.bin', backend='gptj', callbacks=callbacks, verbose=False)

+ match model_type:

+ case "LlamaCpp":

+ llm = LlamaCpp(model_path=model_path, n_ctx=model_n_ctx, callbacks=callbacks, verbose=False)

+ case "GPT4All":

+ llm = GPT4All(model=model_path, n_ctx=model_n_ctx, backend='gptj', callbacks=callbacks, verbose=False)

+ case _default:

+ print(f"Model {model_type} not supported!")

+ exit;

qa = RetrievalQA.from_chain_type(llm=llm, chain_type="stuff", retriever=retriever, return_source_documents=True)

# Interactive questions and answers

while True:

@@ -13,26 +13,35 @@ In order to set your environment up to run the code here, first install all requ

pip install -r requirements.txt

```

-Then, download the 2 models and place them in a folder called `./models`:

-- LLM: default to [ggml-gpt4all-j-v1.3-groovy.bin](https://gpt4all.io/models/ggml-gpt4all-j-v1.3-groovy.bin). If you prefer a different GPT4All-J compatible model, just download it and reference it in `privateGPT.py`.

-- Embedding: default to [ggml-model-q4_0.bin](https://huggingface.co/Pi3141/alpaca-native-7B-ggml/resolve/397e872bf4c83f4c642317a5bf65ce84a105786e/ggml-model-q4_0.bin). If you prefer a different compatible Embeddings model, just download it and reference it in `privateGPT.py` and `ingest.py`.

+Rename example.env to .env and edit the variables appropriately.

+```

+MODEL_TYPE: supports LlamaCpp or GPT4All

+PERSIST_DIRECTORY: is the folder you want your vectorstore in

+LLAMA_EMBEDDINGS_MODEL: Path to your LlamaCpp supported embeddings model

+MODEL_PATH: Path to your GPT4All or LlamaCpp supported LLM

+MODEL_N_CTX: Maximum token limit for both embeddings and LLM models

+```

+

+Then, download the 2 models and place them in a directory of your choice (Ensure to update your .env with the model paths):

+- LLM: default to [ggml-gpt4all-j-v1.3-groovy.bin](https://gpt4all.io/models/ggml-gpt4all-j-v1.3-groovy.bin). If you prefer a different GPT4All-J compatible model, just download it and reference it in your `.env` file.

+- Embedding: default to [ggml-model-q4_0.bin](https://huggingface.co/Pi3141/alpaca-native-7B-ggml/resolve/397e872bf4c83f4c642317a5bf65ce84a105786e/ggml-model-q4_0.bin). If you prefer a different compatible Embeddings model, just download it and reference it in your `.env` file.

## Test dataset

This repo uses a [state of the union transcript](https://github.com/imartinez/privateGPT/blob/main/source_documents/state_of_the_union.txt) as an example.

## Instructions for ingesting your own dataset

-Get your .txt file ready.

+Put any and all of your .txt, .pdf, or .csv files into the source_documents directory

-Run the following command to ingest the data.

+Run the following command to ingest all the data.

```shell

-python ingest.py

@@ -13,26 +13,35 @@ In order to set your environment up to run the code here, first install all requ

pip install -r requirements.txt

```

-Then, download the 2 models and place them in a folder called `./models`:

-- LLM: default to [ggml-gpt4all-j-v1.3-groovy.bin](https://gpt4all.io/models/ggml-gpt4all-j-v1.3-groovy.bin). If you prefer a different GPT4All-J compatible model, just download it and reference it in `privateGPT.py`.

-- Embedding: default to [ggml-model-q4_0.bin](https://huggingface.co/Pi3141/alpaca-native-7B-ggml/resolve/397e872bf4c83f4c642317a5bf65ce84a105786e/ggml-model-q4_0.bin). If you prefer a different compatible Embeddings model, just download it and reference it in `privateGPT.py` and `ingest.py`.

+Rename example.env to .env and edit the variables appropriately.

+```

+MODEL_TYPE: supports LlamaCpp or GPT4All

+PERSIST_DIRECTORY: is the folder you want your vectorstore in

+LLAMA_EMBEDDINGS_MODEL: Path to your LlamaCpp supported embeddings model

+MODEL_PATH: Path to your GPT4All or LlamaCpp supported LLM

+MODEL_N_CTX: Maximum token limit for both embeddings and LLM models

+```

+

+Then, download the 2 models and place them in a directory of your choice (Ensure to update your .env with the model paths):

+- LLM: default to [ggml-gpt4all-j-v1.3-groovy.bin](https://gpt4all.io/models/ggml-gpt4all-j-v1.3-groovy.bin). If you prefer a different GPT4All-J compatible model, just download it and reference it in your `.env` file.

+- Embedding: default to [ggml-model-q4_0.bin](https://huggingface.co/Pi3141/alpaca-native-7B-ggml/resolve/397e872bf4c83f4c642317a5bf65ce84a105786e/ggml-model-q4_0.bin). If you prefer a different compatible Embeddings model, just download it and reference it in your `.env` file.

## Test dataset

This repo uses a [state of the union transcript](https://github.com/imartinez/privateGPT/blob/main/source_documents/state_of_the_union.txt) as an example.

## Instructions for ingesting your own dataset

-Get your .txt file ready.

+Put any and all of your .txt, .pdf, or .csv files into the source_documents directory

-Run the following command to ingest the data.

+Run the following command to ingest all the data.

```shell

-python ingest.py